SemEval Workshop regularly has been conducting tasks in NLP to evaluate the progress in the field.

I and my colleague Ananda Seelan participated in this year SemEval’s Suggestion mining task (Task 9). Here is our submission to be published in NAACL 2019 proceedings, and the code is on github.

This blog is a summary of the key techniques and ideas which influenced this work.

Suggestion Mining Task

The suggestion mining task in brief is a text classification task to find whether a sentence contains a suggestion.

Example,

- Suggestion - It would be nice if they had vegan options.

- Non Suggestion - This restaurant has good vegan options.

About 8k sentences scrapped from technical forumns were provided as training data. The task was divided into two subtasks.

- Subtask A - Evaluation on same domain - technical forums posts.

- Subtask B - Evaluation on out of domain - hotel reviews.

The catch for subtask B is human labelled data in hotel reviews domain is not allowed for training. Our model was placed third place in the leaderboard for Subtask B.

Key Techniques

We used simple convolutional neural networks for text classification. And we applied transfer learning and semi-supervised learning for the tasks.

Transfer Learning

The current trend in machine learning for NLP is to using pre-trained language models. We used google’s recently published BERT model as our representation layer. Take a look at http://jalammar.github.io/illustrated-bert/ for a good description of how pre-trained models work for NLP.

Semi-Supervised Learning

In ACL 2018 conference Melbourne, I attended two talks which impacted the work in this paper. One was Sebastien Ruder’s talk on Strong baselines for semi-supervised learning in NLP. The conclusion of Sebastian Ruder, Barbara Plank (2018) was that classic machine learning techniques for semi-supervised learning such as Tri-Training prove as strong baseline in NLP with neural nets. Sebastien has a very accessible and thorough blog post explaining the techniques. They have also made their code available on github.

We applied a variant of tri-training. We use three models of the same architecture trained initially on data bootstrap sampled from the tech reviews data. The three models are used to iteratively label unlabelled data from hotel reviews domain. Agreement of labels between two models is used as way to select sentences to be added to next iteration of training. Pseudo-code and detailed explanations can be found in the paper. Or you might as well look to the code, as its way simpler than a dry description of it might suggest.

Statistical Significance

The other work published and presented in ACL 2018 that influenced this paper is Rotem Dror’s “The Hitchhiker’s Guide to Statistical Significance in NLP”.

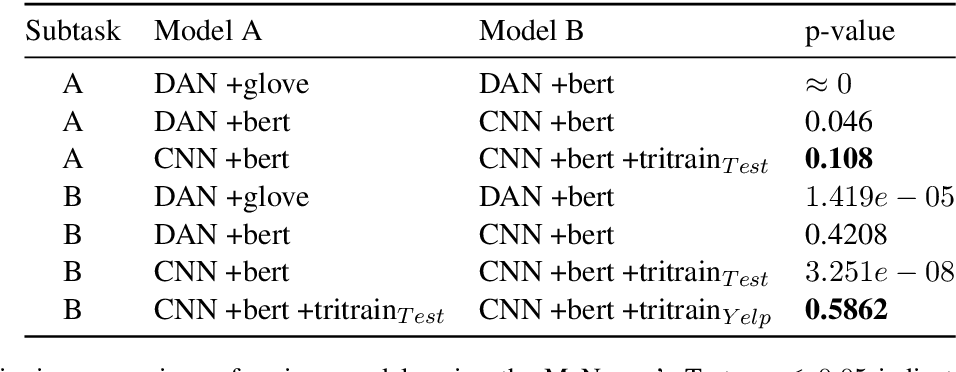

We report confidence intervals for five random seeds for all our experiments. And we also do pair-wise significance testing via McNemar’s test to evaluate whether pair-wise model performance on the test set vary significantly.

Metrics

McNemar’s Test